Excel in Python: Easy Pandas Data Import Guide

Unlock the power of data manipulation with this simple guide to importing data into Python using Pandas, a cornerstone for data analysis in Python. Whether you're dealing with CSVs, Excel spreadsheets, or databases, Pandas simplifies the process, allowing you to focus on analysis rather than data wrangling. In this post, we'll explore the essentials of data import and how to handle common scenarios effectively.

Introduction to Pandas and Data Import

Pandas is a versatile, powerful Python library designed for data manipulation and analysis. Its DataFrame object is key to structuring data in an accessible way, similar to how you might use a spreadsheet. Here’s how to get started:

- Install Pandas:

pip install pandas

- Import it into your Python script or interactive environment:

import pandas as pd

Importing Data from CSV

CSV (Comma-Separated Values) files are a common format for storing data. With Pandas, reading these files is straightforward:

data = pd.read_csv('path/to/yourfile.csv')

Here's what you can customize:

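- Delimiter: Use sep=';' for semicolon-separated files.

- Header: header=None if your file doesn't have a header row.

- Skip rows: skiprows=2 to skip the first two lines of the file. A list such as skiprows=[1, 2] instead skips the lines at those 0-based positions, so the header on line 0 is kept.

- Encoding: encoding='utf-8' to specify character encoding.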
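As a rough illustration of how these options combine, the sketch below parses a small in-memory sample instead of a real file. The sample text, the column names passed via names, and the variable names are all made up for the example.

```python
import io

import pandas as pd

# Hypothetical sample: one junk line, then semicolon-separated rows with no header
raw_bytes = "exported by some tool\nalice;30\nbob;25\n".encode("utf-8")

df = pd.read_csv(
    io.BytesIO(raw_bytes),
    sep=";",                # semicolon-separated values
    header=None,            # the data has no header row
    skiprows=1,             # an integer skips that many lines from the top
    names=["name", "age"],  # assumed column names, supplied by hand
    encoding="utf-8",
)

print(df)
```

Supplying names alongside header=None gives the columns readable labels instead of the default 0, 1, 2.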

Importing Data from Excel

Excel files are ubiquitous in data analysis. Here's how you can import data from them:

data = pd.read_excel('path/to/yourfile.xlsx', sheet_name='Sheet1')

Some common parameters include:

- Sheet: sheet_name=0 or 'Sheet1' to specify which sheet to read. Integers are zero-based positions, so sheet_name=1 selects the second sheet.

- Header: header=None if your Excel sheet lacks headers.

🔎 Note: Ensure that you have the openpyxl library installed for reading Excel files (pip install openpyxl).

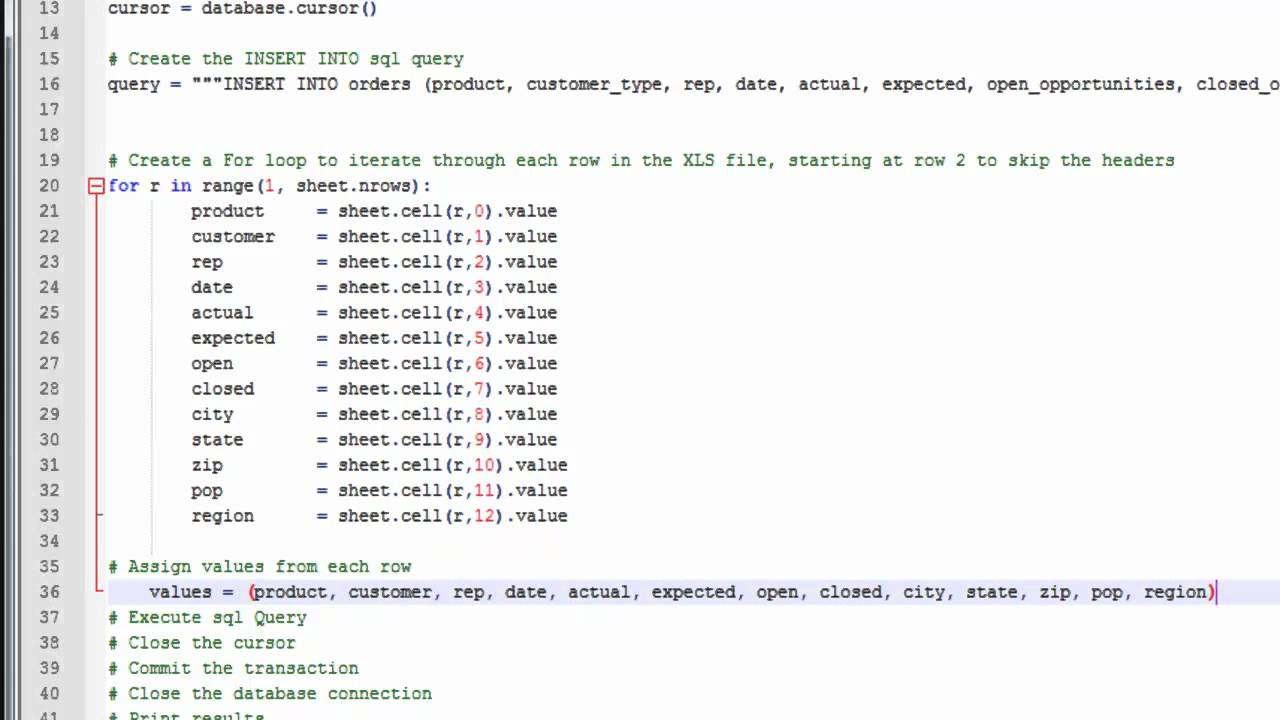

Importing Data from Databases

Pandas can run a SQL query and load the result straight into a DataFrame. Here is an example with SQLite, using Python's built-in sqlite3 module:

import sqlite3

conn = sqlite3.connect('database.db')

# TABLE is a reserved word in SQL, so substitute your real table name for my_table
data = pd.read_sql_query('SELECT * FROM my_table', conn)

conn.close()

- Database Engine: Adjust the connection code for MySQL, PostgreSQL, etc.

- Query: Use read_sql_query with SQL syntax.
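To make the database workflow concrete, here is a minimal sketch that builds a throwaway in-memory SQLite database and reads a filtered result back. The sales table, its columns, and the values are invented for illustration. The params argument passes the filter value separately from the SQL text, which is generally safer than formatting it into the query string.

```python
import sqlite3

import pandas as pd

# In-memory database so the example leaves no file behind
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")  # hypothetical table
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("north", 120.0), ("south", 80.5), ("north", 42.0)],
)
conn.commit()

# The ? placeholder is filled from params by the sqlite3 driver
north = pd.read_sql_query(
    "SELECT region, amount FROM sales WHERE region = ? ORDER BY amount",
    conn,
    params=("north",),
)
conn.close()

print(north)
```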

Dealing with Different Data Formats

Not all data comes in common formats like CSV or Excel. Here’s how you can handle different types:

| Format | Function | Example |
|---|---|---|
| JSON | pd.read_json | pd.read_json('data.json', orient='records') |
| HTML | pd.read_html | dfs = pd.read_html('webpage.html') |
| XML | pd.read_xml | pd.read_xml('data.xml') |
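For instance, the JSON reader can be tried without any file on disk. The sketch below feeds it a small made-up JSON string through io.StringIO; the field names are assumptions for the example only.

```python
import io

import pandas as pd

# Hypothetical payload in "records" layout: a list of one object per row
raw_json = '[{"id": 1, "city": "Oslo"}, {"id": 2, "city": "Lima"}]'

records = pd.read_json(io.StringIO(raw_json), orient="records")

print(records)
```

Note that pd.read_html returns a list of DataFrames, one per table found on the page, which is why the example in the table assigns the result to dfs.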

Handling Common Data Issues

Here’s how to manage common data import challenges:

- Missing Data:

data = pd.read_csv('path/to/yourfile.csv', na_values=['-', 'null'])

- Spaces After Delimiters (including padded column names):

data = pd.read_csv('path/to/yourfile.csv', skipinitialspace=True)

- Large Files:

data_iterator = pd.read_csv('path/to/yourfile.csv', chunksize=1000)
for chunk in data_iterator:
    print(len(chunk))  # Process each chunk (placeholder)

- Datetime Conversion:

data = pd.read_csv('path/to/yourfile.csv', parse_dates=['date_column'])
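A quick way to see the missing-value and date options in action is to parse a tiny in-memory sample. The data below is invented, and only date_column matches a name used above.

```python
import io

import pandas as pd

# Hypothetical sample where "-" and "null" both stand for a missing value
raw = "date_column,value\n2024-01-05,10\n2024-01-06,-\n2024-01-07,null\n"

df = pd.read_csv(
    io.StringIO(raw),
    na_values=["-", "null"],      # treat these strings as NaN
    parse_dates=["date_column"],  # convert this column to datetime
)

print(df.dtypes)
print(df["value"].isna().sum())  # number of missing values detected
```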

⚠️ Note: Handling large datasets in chunks is particularly useful for memory efficiency.
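One common pattern with chunked reading is to keep a running aggregate so that no more than one chunk is held at a time. The sketch below assumes a made-up single-column sample and a deliberately tiny chunksize so the chunking is visible.

```python
import io

import pandas as pd

# Hypothetical data: an "amount" column holding the numbers 1 through 10
raw = "amount\n" + "\n".join(str(n) for n in range(1, 11)) + "\n"

total = 0
rows_seen = 0
for chunk in pd.read_csv(io.StringIO(raw), chunksize=4):
    # Each chunk is an ordinary DataFrame with at most 4 rows
    total += int(chunk["amount"].sum())
    rows_seen += len(chunk)

print(total, rows_seen)
```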

Advanced Import Techniques

Here are some more sophisticated ways to handle data import:

- Merging Files:

import glob
from functools import reduce

file_list = glob.glob('path/to/*.csv')
df_list = [pd.read_csv(f) for f in file_list]

# Outer-join the files on the columns they share
# (to simply stack files with identical columns, pd.concat(df_list) is the usual choice)
merged_data = reduce(lambda df1, df2: pd.merge(df1, df2, how='outer'), df_list)

- Regular Expressions: Use pd.read_csv('file.csv', header=None, sep=r'\s+') for space-separated files with irregular spacing. The raw string (r'...') keeps Python from treating \s as a string escape.

- Custom Functions: For unique data formats, you can define a custom function to read and format the data.

def custom_reader(filepath):
    # Custom logic for reading file
    # ...
    return data  # the DataFrame built by the custom logic above

data = custom_reader('path/to/yourfile.txt')
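To show what such a reader might look like, here is a minimal sketch for an invented format in which each line holds key=value pairs separated by semicolons. The format, the function name read_key_value_lines, and the sample data are all hypothetical; a real proprietary format would need its own parsing rules and error handling.

```python
import io

import pandas as pd


def read_key_value_lines(handle):
    """Parse lines like 'name=alice;age=30' into a DataFrame (hypothetical format)."""
    records = []
    for line in handle:
        line = line.strip()
        if not line:
            continue  # ignore blank lines
        # Split each "key=value" item once, on the first "=" only
        pairs = (item.split("=", 1) for item in line.split(";"))
        records.append({key.strip(): value.strip() for key, value in pairs})
    return pd.DataFrame.from_records(records)


sample = io.StringIO("name=alice;age=30\n\nname=bob;age=25\n")
people = read_key_value_lines(sample)
people["age"] = people["age"].astype(int)  # values arrive as strings

print(people)
```

The function takes an open file-like object rather than a path, so the same code works with open('path/to/yourfile.txt') and with in-memory test data.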

💡 Note: Advanced techniques require a solid understanding of Python and data structures.

In this detailed guide, we’ve explored how to leverage Pandas for importing data from various sources. From basic CSV and Excel files to more complex formats and databases, Pandas offers robust tools to streamline your data analysis workflow. Remember to handle common issues like missing values, date parsing, and memory management when dealing with large datasets. Whether you’re an analyst, a scientist, or a developer, mastering these data import techniques will significantly enhance your productivity in Python.

Can I import multiple Excel sheets at once?

Yes, you can use pd.read_excel('file.xlsx', sheet_name=None) to read all sheets into a dictionary where keys are sheet names and values are DataFrames.

How do I deal with headers spanning multiple rows?

Use the header parameter to specify which rows to use for the column names. For example, pd.read_csv('file.csv', header=[0, 1]) uses the first two rows as multi-index headers.
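As a small sketch of what that produces, the example below parses an invented two-row header from memory and then selects columns by their (top, sub) labels. The header names and values are assumptions for illustration.

```python
import io

import pandas as pd

# Hypothetical file: first row is the group, second row is the quarter
raw = "sales,sales,costs\nq1,q2,q1\n10,20,5\n30,40,15\n"

df = pd.read_csv(io.StringIO(raw), header=[0, 1])

print(df.columns.tolist())  # column labels are (group, quarter) tuples
print(df[("sales", "q2")])  # select one column by its full label
print(df["sales"])          # select every column under the "sales" group
```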

What if my data is in an unfamiliar format?

For unique or proprietary formats, you might need to write a custom function to parse the file or use third-party libraries to convert the data to a compatible format before importing with Pandas.