5 Ways to Link Websites to Excel Sheets

Integrating tools to streamline workflows is a core skill in data analysis and office productivity, and linking Excel spreadsheets to websites is one of the most common pairings. It lets you pull current data, automate updates, and extend what you can do with that data. This guide covers five methods for bringing web-based data into Excel for analysis.

1. Web Scraping with Excel Formulas

Excel does not natively support web scraping, but with some clever use of formulas, you can indeed pull data from web pages.

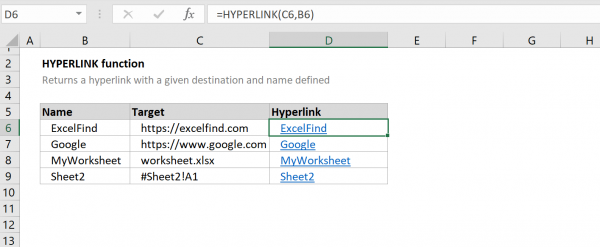

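- WEBSERVICE Function: Use this function to fetch the text returned by a URL, typically XML or JSON from a web service.

=WEBSERVICE("URL_HERE")

- FILTERXML Function: After fetching the data, use FILTERXML with an XPath expression to pull values out of an XML response. FILTERXML cannot parse JSON; for JSON, fall back on text functions or use Power Query instead.

=FILTERXML(WEBSERVICE("URL_HERE"), "XPATH_HERE")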

🌐 Note: Not all websites support XML/JSON output, so this method is limited to specific APIs or websites designed for data extraction. WEBSERVICE and FILTERXML are also only available in Excel 2013 and later for Windows.
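To make the fetch-then-parse idea concrete outside Excel, here is a minimal Python sketch of the same two steps. It is an illustration only, not what Excel runs internally: the sample XML, its element names, and the helper `filter_xml` are all hypothetical, and the XML is hard-coded where WEBSERVICE would normally supply the response text.

```python
import xml.etree.ElementTree as ET

# Hypothetical response text; in Excel, WEBSERVICE would return something like this.
SAMPLE_XML = """<rates>
  <rate currency="USD">1.08</rate>
  <rate currency="GBP">0.85</rate>
</rates>"""


def filter_xml(xml_text, path):
    """Return the text of every element matching a simple XPath-style path."""
    root = ET.fromstring(xml_text)
    return [element.text for element in root.findall(path)]


# Select every <rate> element, then only the one whose currency is GBP.
values = filter_xml(SAMPLE_XML, ".//rate")
gbp_only = filter_xml(SAMPLE_XML, ".//rate[@currency='GBP']")
print(values)
print(gbp_only)
```

The path plays the role of FILTERXML's second argument. Python's `ElementTree` supports only a subset of XPath, so an expression that works here may need adjusting in Excel, and vice versa.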

2. Power Query

Power Query, built into Excel as Get & Transform and also used in Power BI, offers robust web data import and transformation capabilities:

- From the Data tab, select Get Data > From Other Sources > From Web.

- Enter the URL of the website, and Power Query will attempt to interpret and present the data in a format you can work with.

- Use Power Query's editor to clean, transform, and load the data into Excel.

⚙️ Note: Power Query works exceptionally well with structured data like tables, lists, or API responses.
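If you are curious what "interpreting" a page into a table involves, the following Python sketch shows the general idea using only the standard library. It is not Power Query's actual implementation, which is not described here; the class name `TableParser` and the sample HTML are hypothetical, and nested tables are deliberately not handled.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the rows of every <table> as lists of cell strings."""

    def __init__(self):
        super().__init__()
        self.tables = []   # one list of rows per <table> encountered
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Only keep text that appears inside a table cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


# Hypothetical page fragment standing in for a downloaded web page.
SAMPLE_HTML = """
<table>
  <tr><th>Product</th><th>Price</th></tr>
  <tr><td>Widget</td><td>9.99</td></tr>
</table>
"""

parser = TableParser()
parser.feed(SAMPLE_HTML)
print(parser.tables[0])
```

Each table becomes a list of rows, which is roughly the shape Power Query shows in its preview before you apply any cleaning steps.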

3. Using Excel Add-ins

Several third-party add-ins can extend Excel's functionality:

- Power Tools: This add-in can help scrape web pages through a wizard-based interface.

- Web Data Extractor: A tool specifically designed for web scraping, which can export data directly into Excel.

- Other Add-ins: Various plugins available online, like Easy WebData Harvester or web scraping wizards.

🧰 Note: Always verify the add-in's compatibility with your version of Excel and ensure its data handling complies with the website's Terms of Service.

4. VBA Macro for Web Scraping

For more complex scenarios, VBA macros offer a custom approach:

- Open the VBA editor (Alt + F11) in Excel.

- Create a new module and insert code that:

  - Opens a web browser.

  - Navigates to the website.

  - Extracts the required data using HTML object manipulation.

  - Places the data into Excel cells.

🛠️ Note: VBA requires an understanding of programming concepts, but offers endless customization possibilities.

5. Data Connection with External Tools

Using external tools or software to scrape data and import it into Excel can be effective for large-scale or dynamic data needs:

- Google Sheets: Can pull web data through built-in functions such as IMPORTHTML and IMPORTXML, custom scripts, or add-ons. The resulting sheet can then be downloaded or imported into Excel.

- APIs: Many websites provide APIs which, when used with tools like Postman or any programming language's API library, can fetch data and then export it to Excel.

- Web Scraping Software: Tools like ParseHub, Octoparse, or ScraperAPI can collect data from websites and export it in a format like CSV that Excel can open.

🔧 Note: This method requires additional software setup but can significantly expand your data gathering capabilities.
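As one possible version of the API route, the Python sketch below turns a JSON response into CSV text that Excel can open. The response body, its field names, and the function `records_to_csv` are hypothetical; the JSON is hard-coded here, whereas a real script would first request it from the API.

```python
import csv
import io
import json

# Hypothetical API response body; a real one would come from an HTTP request.
SAMPLE_RESPONSE = '[{"symbol": "AAA", "price": 10.5}, {"symbol": "BBB", "price": 7.25}]'


def records_to_csv(json_text):
    """Convert a JSON array of flat objects into CSV text."""
    records = json.loads(json_text)
    if not records:
        return ""
    buffer = io.StringIO()
    # Use the keys of the first record as the column headers.
    writer = csv.DictWriter(buffer, fieldnames=list(records[0].keys()))
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


csv_text = records_to_csv(SAMPLE_RESPONSE)
print(csv_text)
```

To save the result for Excel, you could write `csv_text` to a file opened with `newline=""` and `encoding="utf-8-sig"`, which adds a byte order mark that helps Excel detect UTF-8. The sketch assumes every record has the same flat set of keys; nested JSON would need flattening first.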

Wrapping Up

Linking websites to Excel sheets enriches your data analysis toolkit and automates data collection. Whether through Excel formulas, Power Query, add-ins, custom VBA macros, or external software, you now have a set of strategies for bringing web data into your spreadsheets. This can raise your productivity and reduce manual entry errors, leaving more time for deriving insights from the data you've gathered. Choose the method that fits the complexity of the task, the nature of the data, and your comfort level with the tools involved.

Can I automate updates from a website to Excel?

Yes. Power Query connections can be set to refresh when the workbook opens or every few minutes, and VBA macros can be scheduled to pull new data from websites at regular intervals.

Is web scraping legal?

Legality varies by website and jurisdiction. Always check a website’s terms of service for any restrictions on data scraping, and be mindful of copyright and privacy laws.

Which method should I choose for pulling data from a website?

Choose based on complexity, desired automation level, and your comfort with tools. Start with Excel formulas or Power Query for simpler needs, and opt for external tools or VBA for more complex scenarios.

Can I scrape data from sites with dynamic content?

VBA macros, APIs, and specific web scraping software can handle dynamic content, but it might require JavaScript execution or direct interaction with the page’s DOM.

What are some common pitfalls to avoid when linking Excel to websites?

Avoid scraping copyrighted material, ensure your scripts respect website usage limits, and be aware of potential changes in the website’s structure affecting data extraction.
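If you script your own data collection, one way to respect usage limits is to consult the site's robots.txt before fetching. The Python sketch below shows that check with the standard library. The robots.txt content, the user agent name, and the helper `may_fetch` are hypothetical; a real script would download the site's own file, and robots.txt does not replace reading the Terms of Service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real script would download the site's own file.
SAMPLE_ROBOTS = """User-agent: *
Crawl-delay: 2
Disallow: /private/
"""

AGENT = "excel-import-sketch"  # hypothetical user agent name

rules = RobotFileParser()
rules.parse(SAMPLE_ROBOTS.splitlines())


def may_fetch(url):
    """Return True if the parsed rules allow this agent to request the URL."""
    return rules.can_fetch(AGENT, url)


print(may_fetch("https://example.com/public/table.html"))
print(may_fetch("https://example.com/private/report.html"))
# Seconds to wait between requests, or None if the file sets no delay.
print(rules.crawl_delay(AGENT))
```

With these sample rules the public page is allowed, the private one is not, and the requested delay between requests is 2 seconds.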