5 Ways to Read Excel Sheets in PySpark

Introduction to Reading Excel Files with PySpark

Working with Excel files in PySpark might seem daunting at first, but with the right tools and approaches, it can be an efficient process. PySpark, being an interface for Apache Spark, provides a powerful framework for processing large datasets, including those from Excel spreadsheets. This post will guide you through 5 different methods to read Excel files into PySpark, ensuring you have the flexibility to choose the method that best suits your needs.

1. Using PySpark’s spark.read.format()

The simplest way to read Excel files in PySpark is through the spark.read.format('com.crealytics.spark.excel') function. Here's how you can do it:

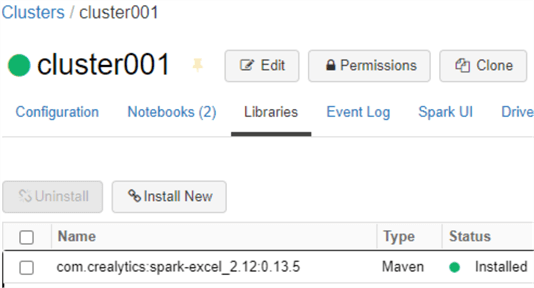

- Install Dependencies: Before you start, ensure you have the necessary libraries installed. You will need to add the

com.crealytics:spark-excel_2.12:0.13.5to your Spark session's configuration.

spark.read.format("com.crealytics.spark.excel")

.option("header", "true")

.option("inferSchema", "true")

.load("path/to/your/file.xlsx").show()

💡 Note: Make sure to adjust the version of spark-excel to match your Spark version.

2. Using pandas DataFrame and Converting to PySpark

If you're more comfortable with pandas, you can load Excel data into a pandas DataFrame and then convert it into a PySpark DataFrame:

- Import Required Libraries:

- Load into Pandas: Use pandas to read the Excel file.

- Convert to PySpark DataFrame:

import pandas as pd

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("pandas-to-pyspark").getOrCreate()

# Load Excel file into pandas DataFrame

pandas_df = pd.read_excel("path/to/your/file.xlsx")

# Convert pandas DataFrame to PySpark DataFrame

pyspark_df = spark.createDataFrame(pandas_df)

pyspark_df.show()

3. Using Apache POI with Custom Code

Apache POI can be used to read Excel files when you need more control over the data reading process. Here’s how to implement this:

- Install POI: You'll need to download and add Apache POI JAR files to your project.

- Read Excel: Use Python to read the file and then construct a PySpark DataFrame from it.

import os

from pyspark.sql.types import *

from pyspark.sql import SparkSession

from org.apache.poi.ss.usermodel import WorkbookFactory

from org.apache.poi.xssf.usermodel import XSSFWorkbook

spark = SparkSession.builder.appName("apache-poi").getOrCreate()

# Read Excel file using POI

workbook = WorkbookFactory.create(open("path/to/your/file.xlsx", "rb"))

sheet = workbook.getSheetAt(0)

# Define schema

schema = StructType([StructField("Column1", StringType(), True), StructField("Column2", StringType(), True)])

# Create an RDD with the data

data = []

for row in sheet.rowIterator():

row_values = [cell.getStringCellValue() for cell in row.cellIterator()]

data.append(row_values)

# Convert to DataFrame

pyspark_df = spark.createDataFrame(data, schema)

pyspark_df.show()

4. JDBC Connection to Excel File

Connecting to an Excel file through JDBC can be an effective approach when dealing with Microsoft Access database-like structures:

- Set up JDBC Driver: You'll need to include the JDBC-ODBC Bridge driver in your project.

- Establish Connection: Create a JDBC connection to your Excel file.

from pyspark.sql import SparkSession

spark = SparkSession.builder.appName("jdbc-excel").getOrCreate()

jdbcUrl = "jdbc:ucanaccess:///path/to/your/file.xlsx;UseJDBCCompliantTimezoneShift=true"

df = spark.read.jdbc(url=jdbcUrl,

table="Sheet1",

properties={"user": "admin", "password": ""})

df.show()

💡 Note: Ensure that the JDBC driver is compatible with your version of Java and Spark.

5. Using Third-Party Libraries

Several third-party libraries like openpyxl, xlrd, and excel can be used in combination with PySpark to read Excel files. Here’s an example with xlrd:

- Install xlrd: Use pip to install

xlrdpackage. - Read Excel File: Use xlrd to open the file and extract data.

- Convert to PySpark DataFrame:

import xlrd

from pyspark.sql import SparkSession

from pyspark.sql.types import *

spark = SparkSession.builder.appName("xlrd-example").getOrCreate()

# Open the workbook

wb = xlrd.open_workbook("path/to/your/file.xlsx")

sheet = wb.sheet_by_index(0)

# Define schema

schema = StructType([StructField("Column1", StringType(), True), StructField("Column2", StringType(), True)])

# Extract data into a list

data = []

for row in range(1, sheet.nrows):

data.append([sheet.cell_value(row, 0), sheet.cell_value(row, 1)])

# Create PySpark DataFrame

df = spark.createDataFrame(data, schema)

df.show()

In the rapidly evolving landscape of big data processing, PySpark stands out as a versatile tool for handling various data formats, including Excel sheets. This post has detailed five different methods to read Excel files into PySpark, catering to various scenarios and data extraction needs. Whether you’re working with large datasets, needing detailed control over Excel specifics, or simply seeking an efficient method, PySpark offers the tools to manage your Excel data with ease.

Understanding these methods provides you with a toolkit for data integration, transformation, and analysis, ensuring that your data pipeline can adapt to any requirement. By leveraging PySpark, you not only boost the efficiency of your data handling but also scale your operations seamlessly across clusters.

What are the prerequisites for reading Excel files in PySpark?

+

Ensure you have Spark installed, and depending on the method you choose, you might need to install additional libraries like ‘spark-excel’, ‘xlrd’, or JDBC drivers.

Can I read Excel files with multiple sheets using PySpark?

+

Yes, you can. Methods like using Apache POI or third-party libraries allow you to specify which sheet you want to read, or you can loop through multiple sheets if needed.

What if my Excel file has a complex structure?

+

For complex Excel files, you might need to use Apache POI or similar libraries which offer greater control over the reading process. This allows you to manage merged cells, different data types, or formulas.