Import 6000 Excel Data Points Quickly

Ever found yourself staring at an Excel file with thousands of data points that you need to import into another system or database? You're not alone: handling large datasets is a common challenge in business, research, and many other fields. Here's how to import 6000 Excel data points quickly and efficiently:

Preparing Your Excel File

Before diving into the actual import process, it's crucial to ensure that your Excel file is in the best possible shape:

- Clean Your Data: Check for duplicates, blank rows, and any outliers that might need correction.

- Format Correctly: Ensure data types match the expected formats (e.g., dates, numbers, text).

- Consistent Headers: Make sure your headers are standardized, as they'll be used as field names during import.

- Remove Special Characters: Special characters in column names can sometimes cause issues during import.
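The checks above can also be scripted. The following is a minimal sketch in Python, assuming the sheet has already been loaded into a list of headers and a list of row lists (for example with pandas). `normalize_header` and `clean_rows` are hypothetical helper names, not part of any library, and the sample data is made up for illustration.

```python
import re


def normalize_header(name):
    """Lowercase a column name and replace special characters with underscores."""
    cleaned = re.sub(r"[^0-9a-zA-Z]+", "_", str(name).strip().lower())
    return cleaned.strip("_")


def clean_rows(rows):
    """Drop blank rows and exact duplicates, keeping the first occurrence."""
    seen = set()
    result = []
    for row in rows:
        # Treat None and whitespace-only cells as empty
        values = tuple("" if v is None else str(v).strip() for v in row)
        if not any(values):
            continue  # blank row
        if values in seen:
            continue  # duplicate row
        seen.add(values)
        result.append(list(row))
    return result


# Illustrative data standing in for a real worksheet
headers = ["Customer Name", "Order #", " Total ($) "]
rows = [
    ["Ada", 1001, 25.5],
    [None, None, None],   # blank row
    ["Ada", 1001, 25.5],  # duplicate
    ["Grace", 1002, 40.0],
]

clean_headers = [normalize_header(h) for h in headers]
cleaned = clean_rows(rows)
```

Outliers are harder to catch automatically, since what counts as one depends on the data, so those are usually better reviewed by hand.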

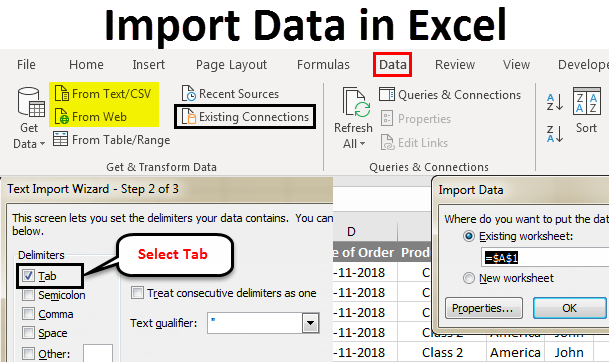

Using Excel's Built-in Tools

Excel itself provides several features that can help streamline the import process:

- Text Import Wizard: This tool lets you define how Excel interprets incoming data, which is especially useful for CSV or TXT files.

- Get & Transform: Access the Power Query editor to perform data transformation tasks before import.

- Data Tab Features: Use the Data Validation, Remove Duplicates, and Text to Columns tools for pre-processing.

🔥 Note: Ensuring your data is clean and well-formatted can drastically reduce the time taken to import data.

Choosing the Right Import Method

1. Manual Copy-Paste

For smaller datasets or when you need to add data to existing entries:

- Select all data in Excel by pressing Ctrl + A.

- Copy the data with Ctrl + C.

- Navigate to your target platform, open a new or existing sheet, and paste the data.

This method is straightforward but time-consuming for larger datasets, making it less ideal for 6000+ data points.

2. Importing with Macros

Use Visual Basic for Applications (VBA) to automate the import process:

- Open the VBA editor with Alt + F11.

- Insert a new module and write a simple macro to handle the data transfer.

- Set up triggers or run the macro manually for the import.

Here's a basic example of a macro:

    Sub ImportData()
        Dim wbSource As Workbook, wbDestination As Workbook
        Dim wsSource As Worksheet, wsDestination As Worksheet
        Dim rngSource As Range, rngDestination As Range

        'Open source workbook (read-only, since it is only copied from)
        Set wbSource = Workbooks.Open("C:\Path\To\Source.xlsx", ReadOnly:=True)
        Set wsSource = wbSource.Worksheets("Sheet1")

        'Use the workbook that contains this macro as the destination
        Set wbDestination = ThisWorkbook
        Set wsDestination = wbDestination.Worksheets("Sheet1")

        'Select source range: the contiguous block of data around A1
        Set rngSource = wsSource.Range("A1").CurrentRegion

        'Size the destination range to match the source
        Set rngDestination = wsDestination.Range("A1").Resize( _
            rngSource.Rows.Count, rngSource.Columns.Count)

        'Copy values only (no formulas or formatting)
        rngDestination.Value = rngSource.Value

        'Close source workbook without saving
        wbSource.Close False

        'Adjust column widths to fit the data
        rngDestination.Columns.AutoFit
    End Sub

3. Bulk Import with Scripts or APIs

For importing into databases or web applications, scripts or APIs can provide an efficient solution:

- CSV/Excel Import Scripts: Write scripts in languages like Python, PHP, or Ruby to import data. Libraries like pandas for Python can read Excel files directly (reading .xlsx files also requires the openpyxl package).

- REST APIs: If your platform supports RESTful services, you can automate data ingestion using API endpoints.

Here’s a simple Python script using pandas:

    import pandas as pd

    # Read Excel file
    data = pd.read_excel('data.xlsx', sheet_name='Sheet1')

    # Assuming your database connection is set up
    # Insert data into your database or API endpoint here
    # For example:
    for index, row in data.iterrows():
        # insert_data is a placeholder for your own insert function
        insert_data(row['Column1'], row['Column2'], ...)  # etc.
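Calling an insert function once per row works, but most databases are faster when rows are sent together. Below is a sketch of that idea using Python's built-in `sqlite3` module as a stand-in for the target database. The table name `records`, its columns, and the sample rows are assumptions made for illustration; in practice the rows would come from the Excel file.

```python
import sqlite3

# Sample rows standing in for the values read from Excel (illustrative data)
rows = [(f"item-{i}", i * 1.5) for i in range(6000)]

# In-memory database as a stand-in for the real target system
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE records (column1 TEXT, column2 REAL)")

# One transaction, one executemany call: all rows are inserted together
with conn:
    conn.executemany(
        "INSERT INTO records (column1, column2) VALUES (?, ?)", rows
    )

inserted = conn.execute("SELECT COUNT(*) FROM records").fetchone()[0]
print(inserted)
```

With pandas, a list of plain tuples like `rows` can be produced from a DataFrame with `list(data.itertuples(index=False, name=None))`.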

💡 Note: For bulk operations, check that your target system supports batch inserts, and make sure your script stays within any API rate limits when sending large amounts of data.
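One way to respect both constraints is to split the rows into fixed-size batches and pause between requests. This is a rough sketch only: `send` is a hypothetical callable representing one request to a bulk endpoint, and the batch size of 500 and the pause length are arbitrary assumptions that would need to match the limits of your platform.

```python
import time


def batches(items, size):
    """Yield successive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def send_in_batches(rows, send, batch_size=500, pause_seconds=0.0):
    """Send rows in batches, pausing between calls to respect a rate limit."""
    sent = 0
    for batch in batches(rows, batch_size):
        send(batch)  # hypothetical callable, e.g. one POST to a bulk endpoint
        sent += len(batch)
        time.sleep(pause_seconds)
    return sent


rows = list(range(6000))  # stand-in for 6000 data points
received = []             # stand-in for the remote system
total = send_in_batches(rows, received.append, batch_size=500)
```

Here 6000 rows become 12 requests instead of 6000, which is usually the main saving.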

4. Using ETL Tools

Extract, Transform, Load (ETL) tools can automate the entire process:

- Tools like Talend, Pentaho, or Alteryx can connect to Excel, transform the data, and load it into your target system.

- These tools often provide a graphical interface to design data flows and transformations.

Handling Errors

When dealing with large datasets, some errors are almost inevitable. Here are strategies to handle them:

- Data Validation: Use Excel's data validation to catch errors before import.

- Logging: Implement logging in scripts to capture where errors occur.

- Data Correction: Have mechanisms in place to flag and fix erroneous data points during or after the import process.
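The logging and flagging strategies above could look something like the following in a Python import script. It is a sketch under assumptions: the column names `name`, `amount`, and `date`, the expected date format, and the helper names are all invented for illustration, and rows are assumed to be dictionaries keyed by column name.

```python
import logging
from datetime import datetime

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("import")


def validate_row(row):
    """Return a list of problems found in one row (empty if the row is valid)."""
    problems = []
    if not str(row.get("name", "")).strip():
        problems.append("name is empty")
    try:
        float(row.get("amount"))
    except (TypeError, ValueError):
        problems.append(f"amount is not a number: {row.get('amount')!r}")
    try:
        datetime.strptime(str(row.get("date")), "%Y-%m-%d")
    except ValueError:
        problems.append(f"date is not YYYY-MM-DD: {row.get('date')!r}")
    return problems


def split_valid_invalid(rows):
    """Separate importable rows from rows needing correction, logging each issue."""
    valid, rejected = [], []
    for number, row in enumerate(rows, start=2):  # row 1 is the header in Excel
        problems = validate_row(row)
        if problems:
            log.warning("Row %d rejected: %s", number, "; ".join(problems))
            rejected.append((number, row, problems))
        else:
            valid.append(row)
    return valid, rejected


rows = [
    {"name": "Ada", "amount": "25.5", "date": "2024-01-15"},
    {"name": "", "amount": "abc", "date": "15/01/2024"},
]
valid, rejected = split_valid_invalid(rows)
```

Keeping the spreadsheet row number with each rejected row makes it easier to find and fix the source cell afterwards.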

Wrapping Up

Importing 6000 data points from an Excel file is a manageable task with the right preparation and tools. By cleaning your data, choosing an appropriate import method, and setting up systems to handle errors, you can drastically reduce the time and effort required. The key is to automate as much as possible: use Excel's built-in tools to prepare the data, and for larger datasets, use scripts or ETL tools for the import itself.

What are the risks of manual data import?

Manual import increases the risk of human error, data corruption, and loss of data integrity, and it can be very time-consuming. Automation reduces these risks.

How do I ensure data integrity during import?

Validate data in Excel before import, use automated scripts with error handling, and make sure your target system enforces its own data validation rules.

Can I undo an import if something goes wrong?

Many systems allow rollback or deletion of recently imported data, but this varies by platform, so always keep a backup of your original data before proceeding.
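Where the target is a database that supports transactions, one common way to get an "undo" is to run the whole import inside a single transaction, so a failure leaves the table unchanged. The sketch below uses Python's built-in `sqlite3` module as a stand-in; the `records` table, the sample rows, and the `import_rows` helper are assumptions for illustration, and other systems may behave differently.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE records (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

good_rows = [(1, "Ada"), (2, "Grace")]
bad_rows = [(3, "Linus"), (4, None)]  # None violates the NOT NULL constraint


def import_rows(connection, rows):
    """Insert all rows in one transaction; return True on success, False on rollback."""
    try:
        with connection:  # commits on success, rolls back on exception
            connection.executemany(
                "INSERT INTO records (id, name) VALUES (?, ?)", rows
            )
        return True
    except sqlite3.IntegrityError:
        return False


first = import_rows(conn, good_rows)
second = import_rows(conn, bad_rows)
count = conn.execute("SELECT COUNT(*) FROM records").fetchone()[0]
```

The second batch fails on its last row, so the valid row before it is rolled back as well and only the first batch remains in the table.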

What if my data doesn’t fit into one spreadsheet?

You can use Excel’s Consolidate feature or merge the data manually before import. Alternatively, script-based solutions can read multiple Excel files and combine them efficiently.

How often should I clean my data before import?

Always clean data before importing, especially when dealing with large datasets. Regular cleaning helps maintain data quality over time.